Monitoring CPU and GPU Status of KubeEdge Edge Nodes (Jetson) on KubeSphere with Prometheus

Observability of KubeSphere Edge Nodes

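KubeSphere's vision is to build a cloud-native distributed operating system with Kubernetes as its kernel. Its architecture makes it easy to integrate third-party applications and cloud-native ecosystem components in a plug-and-play manner.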

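In edge computing scenarios, KubeSphere relies on KubeEdge to distribute and manage applications and workloads uniformly across the cloud and edge nodes, meeting the need to deliver, operate, and control applications on large numbers of edge and end devices.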

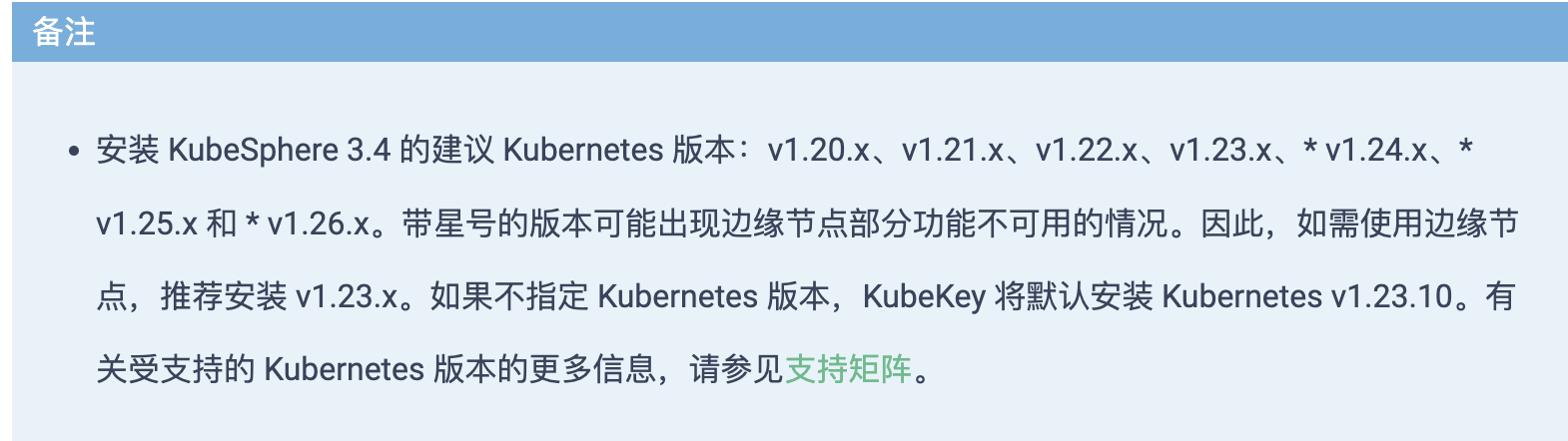

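According to KubeSphere's support matrix, only Kubernetes 1.23.x officially supports edge computing, and the KubeSphere console does not display monitoring information such as resource utilization for edge nodes.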

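This article builds an integrated cloud-edge computing platform on KubeSphere and KubeEdge and uses Prometheus to monitor the status of NVIDIA Jetson edge devices, bringing observability to KubeSphere edge nodes.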

| Component | Version |
|---|---|
| KubeSphere | 3.4.1 |
| containerd | 1.7.2 |
| k8s | 1.26.0 |
| KubeEdge | 1.15.1 |
| Jetson model | NVIDIA Jetson Xavier NX (16 GB RAM) |
| Jtop | 4.2.7 |
| JetPack | 5.1.3-b29 |
| Docker | 24.0.5 |

Deploying the k8s Environment

Refer to the KubeSphere deployment documentation. KubeKey can quickly deploy a k8s cluster.


Deploying the KubeEdge Environment

Deploy KubeEdge by following the article "Deploying the Latest KubeEdge on KubeSphere".

Enabling Log Queries on Edge Nodes

- Edit the EdgeCore configuration file: `vim /etc/kubeedge/config/edgecore.yaml`
- Set `enable: true` for the log-streaming module (`edgeStream`).

Once this is enabled, you can query pod logs to troubleshoot problems.

Modifying the KubeSphere Configuration (KubeEdge < 1.17.0)

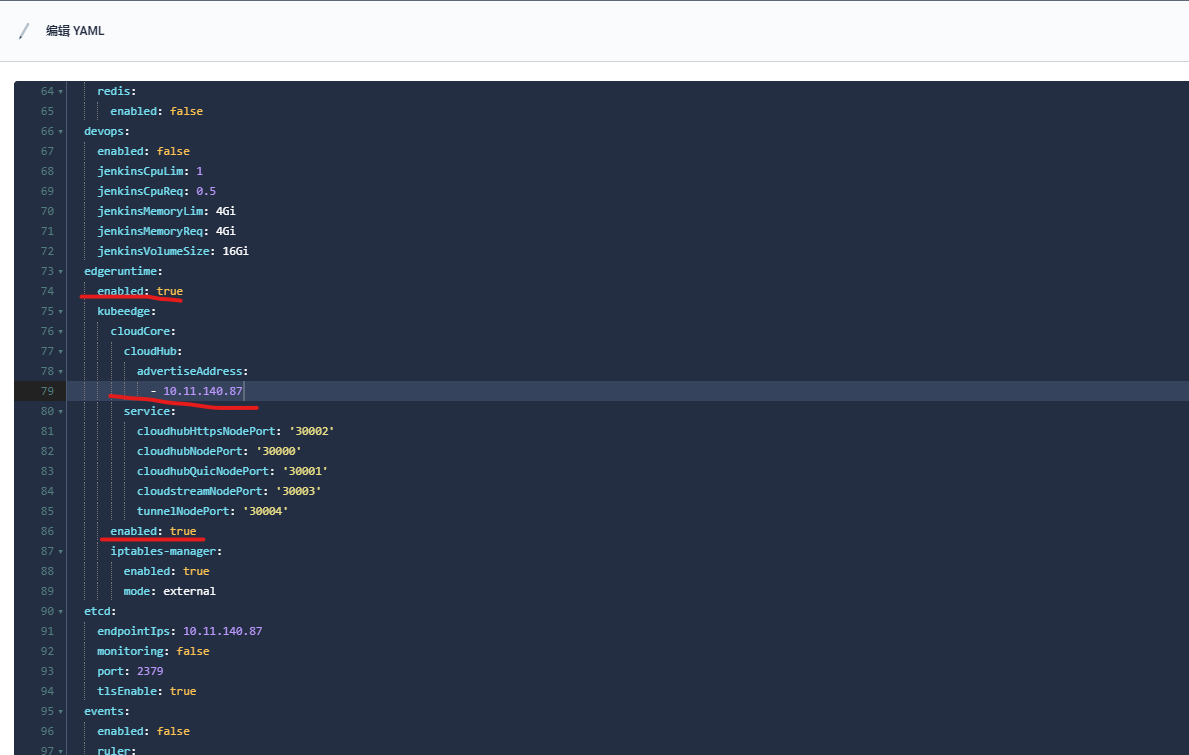

1. Enable the KubeEdge edge node plugin

- Edit the ClusterConfiguration
- Set `advertiseAddress` to the address of the physical machine where CloudHub runs

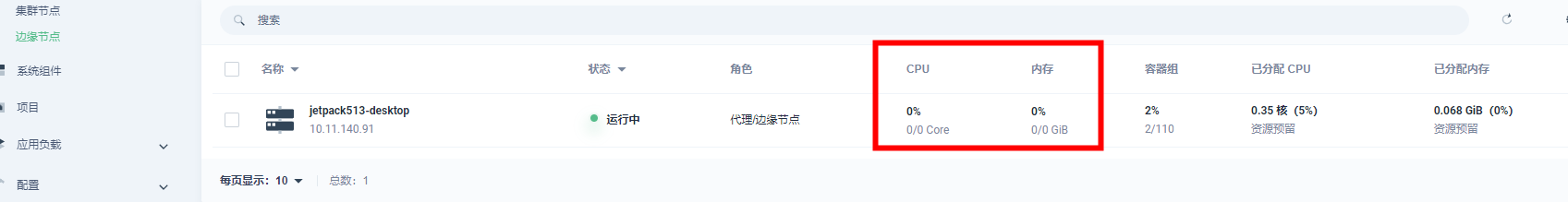

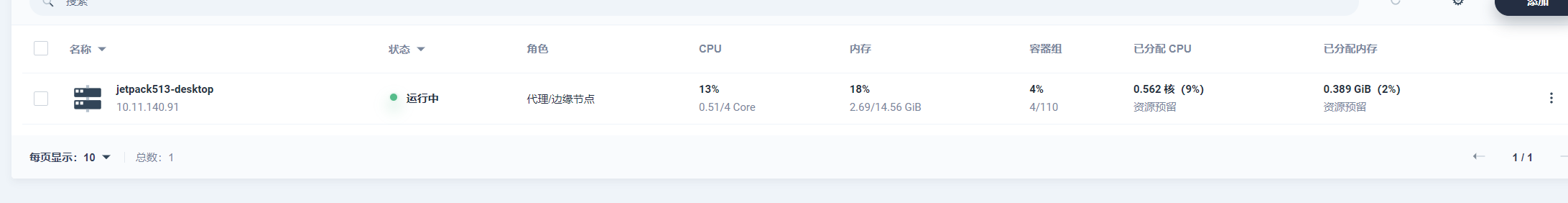

After this change the edge node shows up in the console, but without any CPU or memory information: there is no node-exporter pod on the edge node.

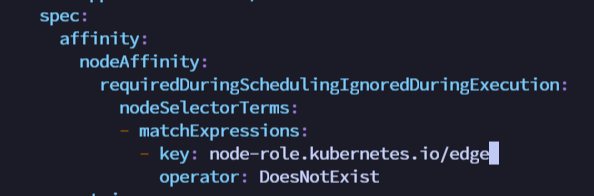

2. Modify the node-exporter affinity

Running `kubectl get ds -n kubesphere-monitoring-system` shows that node-exporter is not scheduled onto edge nodes.

Modify its affinity so that the DaemonSet can also be scheduled onto edge nodes.
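Why the DaemonSet skips the edge node can be illustrated with a small sketch of how one `nodeAffinity` term is evaluated against node labels. It assumes (this is not shown in the article) that the node-exporter DaemonSet carries a required term on `node-role.kubernetes.io/edge` with operator `DoesNotExist`, and that the KubeEdge node carries the `node-role.kubernetes.io/edge` label; check `kubectl get ds node-exporter -n kubesphere-monitoring-system -o yaml` and `kubectl get node --show-labels` in your own cluster. The functions are a simplified Python model of the `In`, `NotIn`, `Exists` and `DoesNotExist` operators, not scheduler code.

```python
from typing import Dict, List

# One matchExpressions entry: {"key": ..., "operator": ..., "values": [...]}
Requirement = Dict[str, object]


def requirement_matches(labels: Dict[str, str], req: Requirement) -> bool:
    """Evaluate a single node-affinity requirement against a node's labels."""
    key = req["key"]
    op = req["operator"]
    values = req.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # NotIn also matches when the label is absent
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {op}")


def term_matches(labels: Dict[str, str], match_expressions: List[Requirement]) -> bool:
    """All requirements inside one nodeSelectorTerm are ANDed."""
    return all(requirement_matches(labels, r) for r in match_expressions)


# Hypothetical term that keeps a DaemonSet off edge nodes (assumption)
exclude_edge = [{"key": "node-role.kubernetes.io/edge", "operator": "DoesNotExist"}]

cloud_node = {"kubernetes.io/hostname": "master", "node-role.kubernetes.io/control-plane": ""}
edge_node = {"kubernetes.io/hostname": "edge-wpx", "node-role.kubernetes.io/edge": ""}

print(term_matches(cloud_node, exclude_edge))  # True: scheduled on the cloud node
print(term_matches(edge_node, exclude_edge))   # False: skipped on the edge node
```

Within one `nodeSelectorTerms` entry the expressions are ANDed, while separate terms are ORed, so either removing the exclusion or adding a second term that matches edge nodes lets the DaemonSet land on the edge node.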

node-exporter is now deployed on the edge node, but its pods fail to start.

Inspecting the failed pod with `kubectl edit` shows that its kube-rbac-proxy container fails to start. The container logs show that kube-rbac-proxy tries to read the KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT environment variables, cannot get them, and therefore exits.

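In a Kubernetes cluster, every new pod receives the KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT environment variables so that processes inside the pod can reach kube-apiserver. In pods created on KubeEdge edge nodes, however, these two variables exist but are empty.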
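Why an empty value is fatal can be sketched in a few lines. In-cluster clients such as client-go's `rest.InClusterConfig` build the API server address from these two variables and give up when either one is empty; the Python function below mirrors that check. Its name and error text are illustrative and are not taken from kube-rbac-proxy.

```python
import os
from typing import Mapping, Optional


def in_cluster_api_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build the kube-apiserver URL the way in-cluster clients do.

    An empty value is treated exactly like a missing one, which is the
    situation in edge pods on KubeEdge < 1.17.
    """
    env = os.environ if env is None else env
    host = env.get("KUBERNETES_SERVICE_HOST", "")
    port = env.get("KUBERNETES_SERVICE_PORT", "")
    if not host or not port:
        raise RuntimeError(
            "unable to load in-cluster configuration: "
            "KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT must be defined"
        )
    # IPv6 literals need brackets, as Go's net.JoinHostPort adds them
    if ":" in host:
        host = f"[{host}]"
    return f"https://{host}:{port}"


print(in_cluster_api_url({"KUBERNETES_SERVICE_HOST": "10.96.0.1",
                          "KUBERNETES_SERVICE_PORT": "443"}))  # https://10.96.0.1:443
```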

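According to members of the KubeEdge community at Huawei, KubeEdge 1.17 will add support for setting these two environment variables (see the corresponding KubeEdge community proposal).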

In the meantime, installing EdgeMesh is recommended: once it is installed, pods on edge nodes can reach kubernetes.default.svc.cluster.local:443.

3. Deploy EdgeMesh

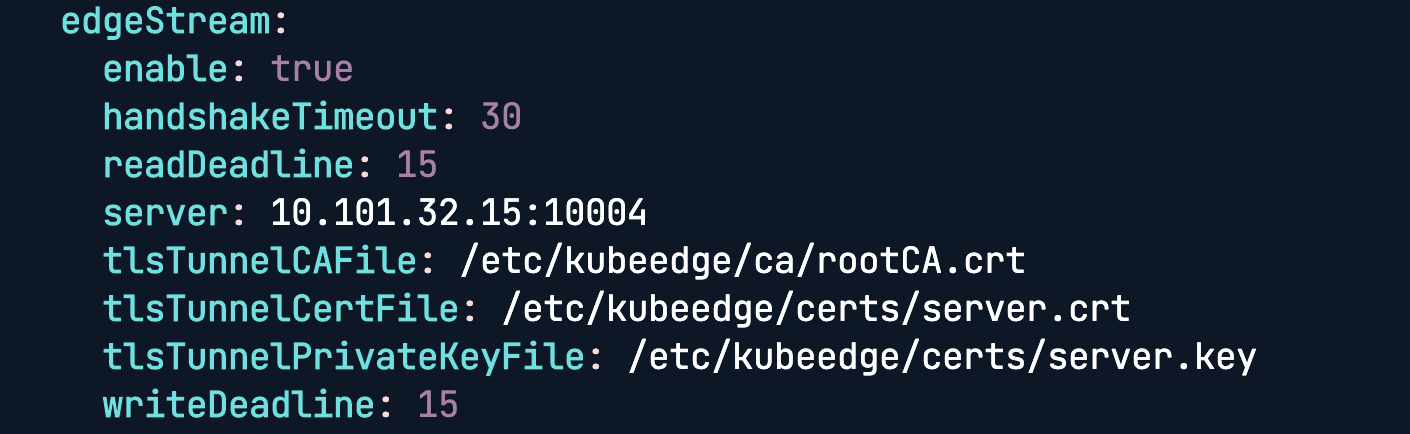

Configure the cloudcore configmap

Run `kubectl edit cm cloudcore -n kubeedge` and set `dynamicController.enable` to `true`. After the change, restart cloudcore by deleting its pod:

```shell
kubectl delete pod cloudcore-776ffcbbb9-s6ff8 -n kubeedge
```

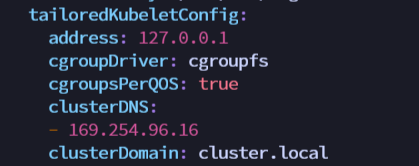

Configure the edgecore modules: enable `metaServer` and set `clusterDNS`

```shell
vim /etc/kubeedge/config/edgecore.yaml
```

```yaml
modules:
  ...
  metaManager:
    metaServer:
      enable: true        # configure this
  ...
  edged:
    ...
    tailoredKubeletConfig:
      ...
      clusterDNS:         # configure this
      - 169.254.96.16
  ...
```

```shell
# restart edgecore
systemctl restart edgecore
```

After the changes, verify that they took effect:

```shell
$ curl 127.0.0.1:10550/api/v1/services
{"apiVersion":"v1","items":[{"apiVersion":"v1","kind":"Service","metadata":{"creationTimestamp":"2021-04-14T06:30:05Z","labels":{"component":"apiserver","provider":"kubernetes"},"name":"kubernetes","namespace":"default","resourceVersion":"147","selfLink":"default/services/kubernetes","uid":"55eeebea-08cf-4d1a-8b04-e85f8ae112a9"},"spec":{"clusterIP":"10.96.0.1","ports":[{"name":"https","port":443,"protocol":"TCP","targetPort":6443}],"sessionAffinity":"None","type":"ClusterIP"},"status":{"loadBalancer":{}}},{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{"prometheus.io/port":"9153","prometheus.io/scrape":"true"},"creationTimestamp":"2021-04-14T06:30:07Z","labels":{"k8s-app":"kube-dns","kubernetes.io/cluster-service":"true","kubernetes.io/name":"KubeDNS"},"name":"kube-dns","namespace":"kube-system","resourceVersion":"203","selfLink":"kube-system/services/kube-dns","uid":"c221ac20-cbfa-406b-812a-c44b9d82d6dc"},"spec":{"clusterIP":"10.96.0.10","ports":[{"name":"dns","port":53,"protocol":"UDP","targetPort":53},{"name":"dns-tcp","port":53,"protocol":"TCP","targetPort":53},{"name":"metrics","port":9153,"protocol":"TCP","targetPort":9153}],"selector":{"k8s-app":"kube-dns"},"sessionAffinity":"None","type":"ClusterIP"},"status":{"loadBalancer":{}}}],"kind":"ServiceList","metadata":{"resourceVersion":"377360","selfLink":"/api/v1/services"}}
```
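The same check can be scripted. A minimal sketch using only the Python standard library: `fetch_service_ips` queries EdgeCore's MetaServer at the `127.0.0.1:10550` endpoint used above (so it only works on the edge node itself), and `service_ips` maps each `namespace/name` to its `clusterIP`. Both function names are hypothetical, and the sample below is a trimmed copy of the response shown above.

```python
import json
import urllib.request
from typing import Dict

METASERVER_URL = "http://127.0.0.1:10550/api/v1/services"


def service_ips(service_list: dict) -> Dict[str, str]:
    """Map 'namespace/name' -> clusterIP from a v1 ServiceList object."""
    if service_list.get("kind") != "ServiceList":
        raise ValueError("not a ServiceList")
    return {
        f'{svc["metadata"]["namespace"]}/{svc["metadata"]["name"]}': svc["spec"].get("clusterIP", "")
        for svc in service_list.get("items", [])
    }


def fetch_service_ips(url: str = METASERVER_URL) -> Dict[str, str]:
    """Query MetaServer on the edge node and summarize the Services it knows."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return service_ips(json.load(resp))


# Trimmed sample of the response shown above
sample = json.loads("""
{"kind": "ServiceList", "apiVersion": "v1", "items": [
  {"metadata": {"name": "kubernetes", "namespace": "default"}, "spec": {"clusterIP": "10.96.0.1"}},
  {"metadata": {"name": "kube-dns", "namespace": "kube-system"}, "spec": {"clusterIP": "10.96.0.10"}}
]}
""")
print(service_ips(sample))
# {'default/kubernetes': '10.96.0.1', 'kube-system/kube-dns': '10.96.0.10'}
```

If `default/kubernetes` is missing from the result, MetaServer is not serving cluster data to the edge node yet.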

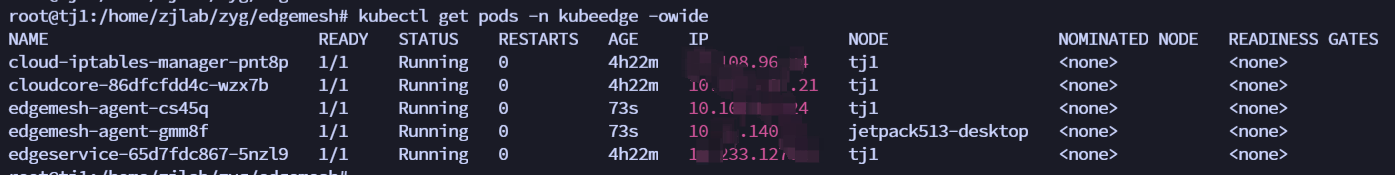

Install EdgeMesh

```shell
git clone https://github.com/kubeedge/edgemesh.git
cd edgemesh
kubectl apply -f build/crds/istio/
kubectl apply -f build/agent/resources/
```


4. Modify dnsPolicy

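After EdgeMesh is deployed, the two environment variables in the node-exporter pod on the edge node are still empty, and the pod still cannot reach kubernetes.default.svc.cluster.local:443. The cause is a wrong DNS server in the pod: it should be 169.254.96.16, but the pod uses the same DNS configuration as the host.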


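Because node-exporter runs with `hostNetwork: true`, the default `ClusterFirst` DNS policy falls back to the host's DNS settings. Change dnsPolicy to `ClusterFirstWithHostNet` and restart node-exporter; the pod's DNS configuration is then correct.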

```shell
kubectl edit ds node-exporter -n kubesphere-monitoring-system
```

```yaml
# under spec.template.spec
dnsPolicy: ClusterFirstWithHostNet
hostNetwork: true
```

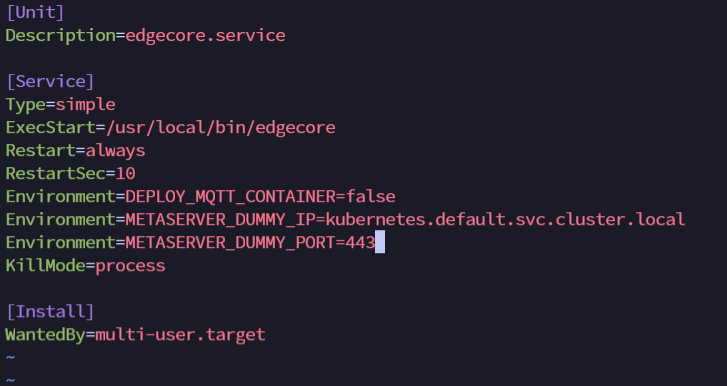
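To confirm the fix from inside the pod, one can check that the first `nameserver` in the pod's `/etc/resolv.conf` is the EdgeMesh DNS address 169.254.96.16 configured in `clusterDNS` earlier. A small parsing sketch; the helper names are hypothetical and the two sample files are illustrative, not captured from the author's cluster.

```python
from typing import List

EXPECTED_DNS = "169.254.96.16"  # clusterDNS value configured in edgecore.yaml


def nameservers(resolv_conf: str) -> List[str]:
    """Return nameserver addresses in file order, skipping comment lines."""
    servers = []
    for line in resolv_conf.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith(("#", ";")):
            continue
        parts = stripped.split()
        if parts[0] == "nameserver" and len(parts) >= 2:
            servers.append(parts[1])
    return servers


def uses_cluster_dns(resolv_conf: str, expected: str = EXPECTED_DNS) -> bool:
    """The resolver tries the first nameserver first, so it must be the cluster DNS."""
    servers = nameservers(resolv_conf)
    return bool(servers) and servers[0] == expected


host_style = "# managed by systemd-resolved\nnameserver 127.0.0.53\noptions edns0 trust-ad\n"
cluster_style = (
    "search kubesphere-monitoring-system.svc.cluster.local svc.cluster.local cluster.local\n"
    "nameserver 169.254.96.16\n"
    "options ndots:5\n"
)
print(uses_cluster_dns(host_style))     # False
print(uses_cluster_dns(cluster_style))  # True
```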

5. Add the environment variables

Edit the EdgeCore systemd unit file:

```shell
vim /etc/systemd/system/edgecore.service
```

Restart edgecore after the change.

node-exporter is now Running!

Running `curl http://127.0.0.1:9100/metrics` on the edge node shows that the edge node's metrics are now being collected.

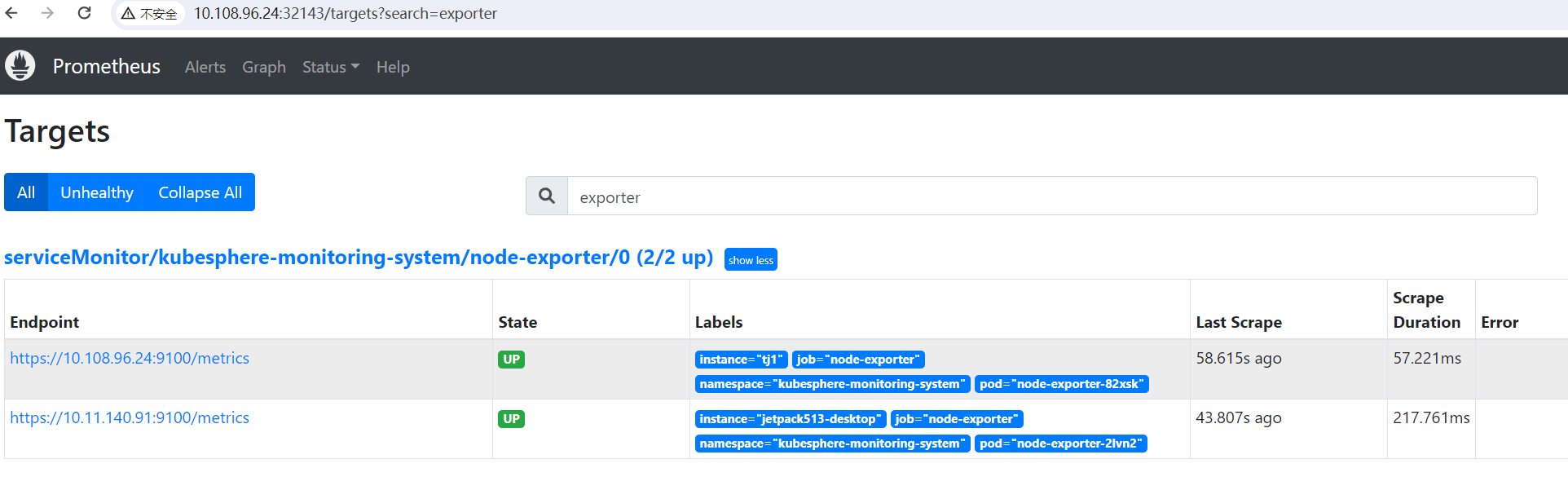
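That endpoint returns the Prometheus text exposition format. A minimal parsing sketch with the standard library: it handles the plain `name{labels} value` lines node-exporter emits and skips `# HELP`/`# TYPE` comments, but it is not a complete parser (escaped quotes inside label values are not handled and optional timestamps are discarded). To read through the NodePort described below, pass `http://<master-ip>:32143/metrics` (placeholder) to `fetch_metrics`. The sample text is illustrative.

```python
import re
import urllib.request
from typing import Dict, Iterator, Tuple

# metric name, optional {label="value",...}, value, optional integer timestamp
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def fetch_metrics(url: str = "http://127.0.0.1:9100/metrics") -> str:
    """Download the raw exposition text."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def parse_metrics(text: str) -> Iterator[Tuple[str, Dict[str, str], float]]:
    """Yield (metric_name, labels, value) for each sample line."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        m = SAMPLE_RE.match(line)
        if not m:
            continue
        name, raw_labels, value = m.groups()
        yield name, dict(LABEL_RE.findall(raw_labels or "")), float(value)


sample = """\
# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
node_cpu_seconds_total{cpu="0",mode="user"} 100.25
node_memory_MemAvailable_bytes 8e+09
"""
idle = sum(v for name, labels, v in parse_metrics(sample)
           if name == "node_cpu_seconds_total" and labels.get("mode") == "idle")
print(idle)  # 1234.5
```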

Finally, expose the node-exporter Service through a NodePort so that the data can be viewed in a browser.

Visiting the master IP on port 32143 then returns the edge node's node-exporter data.

The CPU and memory information now also appears in the console.

KubeEdge = 1.17.0

Enabling the KubeEdge edge node plugin and modifying the node-exporter affinity are the same as for KubeEdge < 1.17.0.

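When deploying 1.17.0, note that edge pods must be able to access kube-apiserver through InClusterConfig, so set `cloudCore.featureGates.requireAuthorization=true` and `cloudCore.modules.dynamicController.enable=true`. For details, see the article published on the KubeEdge WeChat official account.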

After EdgeCore starts, modify edgecore.yaml as follows and then restart EdgeCore.

Set `metaServer.enable: true` and add `featureGates: requireAuthorization: true`.

Restart edgecore after the change.

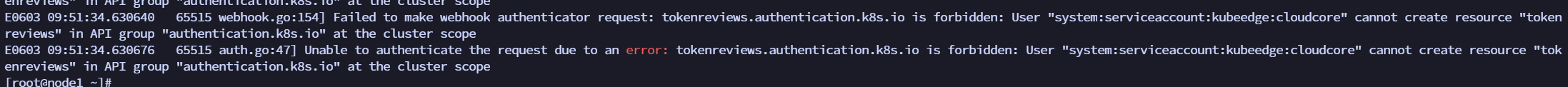

Creating a ClusterRoleBinding

The containers in node-exporter now report this error: `Unable to authenticate the request due to an error: tokenreviews.authentication.k8s.io is forbidden: User "system:serviceaccount:kubeedge:cloudcore" cannot create resource "tokenreviews" in API group "authentication.k8s.io" at the cluster scope`

The cloudcore service account lacks permission to create `tokenreviews`, so create a ClusterRoleBinding that grants it.
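The exact binding the author used is not reproduced here. As a hedged sketch, one option is to bind cloudcore's service account (the subject named in the error, `system:serviceaccount:kubeedge:cloudcore`) to the built-in `system:auth-delegator` ClusterRole, which grants `create` on `tokenreviews` and `subjectaccessreviews`. The snippet builds that manifest as JSON, which `kubectl apply -f` accepts; the binding name `cloudcore-auth-delegator` is hypothetical.

```python
import json


def cluster_role_binding(name: str, role: str, sa_namespace: str, sa_name: str) -> dict:
    """Build an rbac.authorization.k8s.io/v1 ClusterRoleBinding for a ServiceAccount."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": role,
        },
        "subjects": [
            {"kind": "ServiceAccount", "name": sa_name, "namespace": sa_namespace}
        ],
    }


# Subject taken from the error message: system:serviceaccount:kubeedge:cloudcore
binding = cluster_role_binding(
    name="cloudcore-auth-delegator",   # hypothetical name
    role="system:auth-delegator",      # built-in role that may create tokenreviews
    sa_namespace="kubeedge",
    sa_name="cloudcore",
)
print(json.dumps(binding, indent=2))
```

Saved as a script, it can be applied with `python3 make_crb.py | kubectl apply -f -` (the script name is a placeholder).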

Once the ClusterRoleBinding exists, the edge node's monitoring data can be queried.

With CPU and memory done, the next step is the GPU.

Monitoring Jetson GPU Status

Installing Jtop

Jetson is an ARM-based device on which nvidia-smi is not available, so jtop (from the jetson-stats package) needs to be installed instead.

Installing the Jetson GPU Exporter

Following a blog post, build a Jetson GPU Exporter image; the post also provides a matching Grafana dashboard.

Dockerfile


Code of `jetson_stats_prometheus_collector.py`
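The collector source is not reproduced here. As a stand-in, a minimal sketch of what such an exporter does: render a few gauge values in the Prometheus text exposition format and serve them on `/metrics` with the standard-library `http.server`. The metric names (`jetson_gpu_usage_percent`, `jetson_gpu_temp_celsius`), the default port 9102 and `read_gpu_stats()` are hypothetical placeholders; a real collector would read the values from jtop instead.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict


def read_gpu_stats() -> Dict[str, float]:
    """Hypothetical placeholder: replace with values read from jtop."""
    return {"jetson_gpu_usage_percent": 37.0, "jetson_gpu_temp_celsius": 45.5}


def render_gauges(values: Dict[str, float]) -> str:
    """Render name -> value pairs as Prometheus gauges in the text format."""
    lines = []
    for name, value in sorted(values.items()):
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_gauges(read_gpu_stats()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 9102) -> None:
    """Serve /metrics until interrupted (9102 is an arbitrary port for this sketch)."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` inside the container starts the endpoint; whatever port is chosen must match the Service and ServiceMonitor created next.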

Remember to label the Jetson board so that the GPU exporter runs only on Jetson; on other nodes it would fail because there is no data to collect.

```shell
kubectl label node edge-wpx machine.type=jetson
```

Creating the KubeSphere Resources

Create a ServiceAccount, a DaemonSet, a Service, and a ServiceMonitor so that the data collected by jetson-exporter is made available to KubeSphere's Prometheus.
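A hedged sketch of the two pieces that tie this together, built as Python dicts and printed as JSON: a ServiceMonitor (the `monitoring.coreos.com/v1` resource of the Prometheus Operator that KubeSphere's monitoring stack uses) selecting the exporter's Service, and a DaemonSet patch whose `nodeSelector` pins the exporter to nodes labeled `machine.type=jetson`. The namespace, the `app: jetson-exporter` label, the port name `metrics` and the 30s interval are assumptions; also check that your Prometheus instance's `serviceMonitorSelector` matches the ServiceMonitor's labels.

```python
import json

EXPORTER_LABELS = {"app": "jetson-exporter"}  # assumed Service/Pod label


def service_monitor(namespace: str) -> dict:
    """ServiceMonitor that scrapes the Service port named 'metrics' (assumed)."""
    return {
        "apiVersion": "monitoring.coreos.com/v1",
        "kind": "ServiceMonitor",
        "metadata": {"name": "jetson-exporter", "namespace": namespace,
                     "labels": dict(EXPORTER_LABELS)},
        "spec": {
            "selector": {"matchLabels": dict(EXPORTER_LABELS)},
            "namespaceSelector": {"matchNames": [namespace]},
            "endpoints": [{"port": "metrics", "interval": "30s", "path": "/metrics"}],
        },
    }


def jetson_node_selector_patch() -> dict:
    """DaemonSet merge patch: run only on nodes labeled machine.type=jetson."""
    return {"spec": {"template": {"spec": {"nodeSelector": {"machine.type": "jetson"}}}}}


if __name__ == "__main__":
    print(json.dumps(service_monitor("kubesphere-monitoring-system"), indent=2))
    print(json.dumps(jetson_node_selector_patch()))
```

The ServiceMonitor JSON can be piped into `kubectl apply -f -`, and the patch applied with `kubectl patch ds <name> -n <namespace> --type merge -p '<json>'`.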

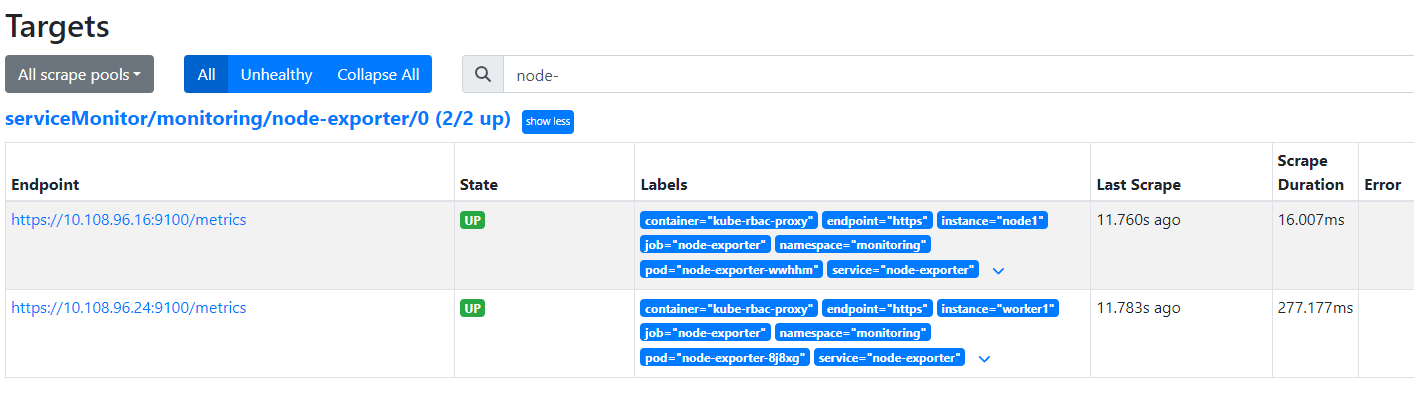

After deployment, the jetson-exporter pod is Running.

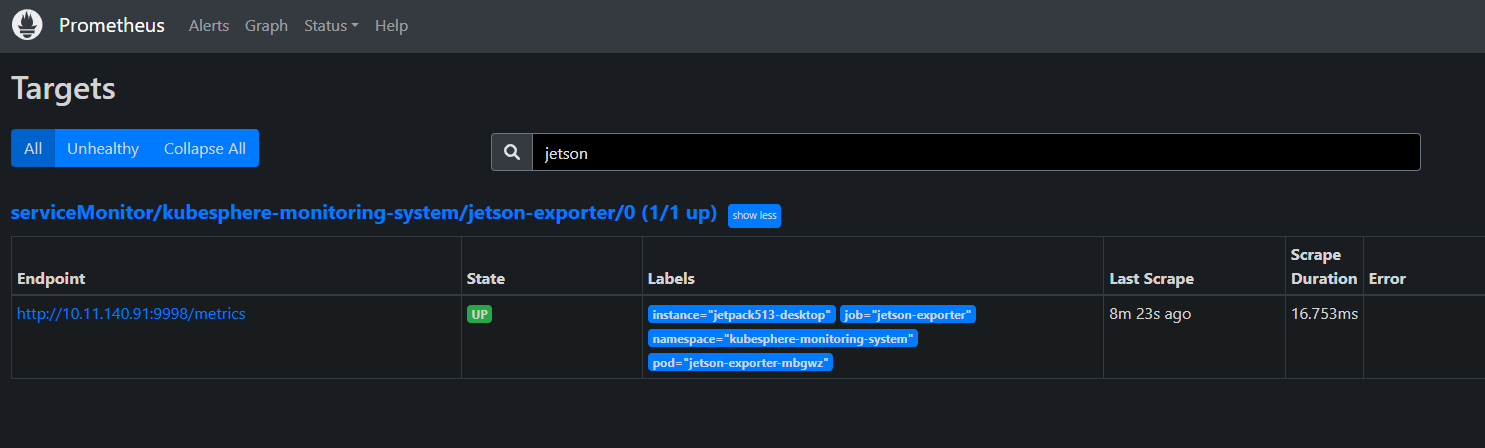

Restart the Prometheus pod. Once the configuration is reloaded, the new GPU exporter target appears in the Prometheus UI.

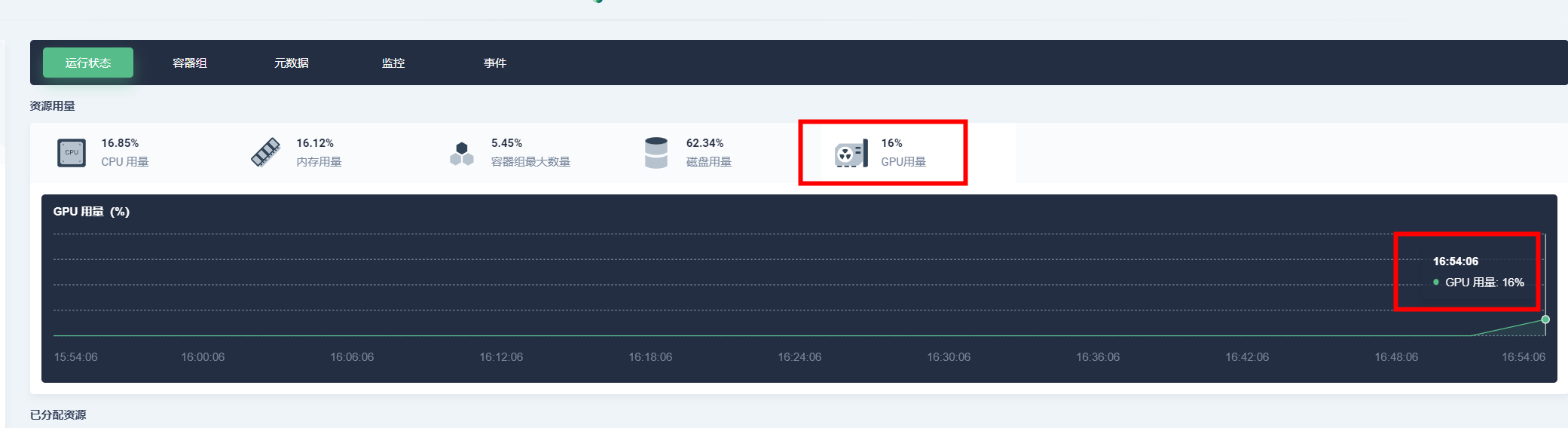
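Besides the UI, the same information is available from Prometheus' HTTP API: `GET /api/v1/targets` lists the active targets with their `health`. A minimal sketch that filters targets by a substring of their scrape pool; the base URL is a placeholder and the sample data (pool names, IP, port) is illustrative.

```python
import json
import urllib.request
from typing import List, Tuple


def target_health(targets_response: dict, pool_substring: str) -> List[Tuple[str, str, str]]:
    """Return (scrapePool, scrapeUrl, health) for active targets whose pool matches."""
    if targets_response.get("status") != "success":
        raise RuntimeError("Prometheus API returned an error")
    return [
        (t["scrapePool"], t["scrapeUrl"], t["health"])
        for t in targets_response["data"]["activeTargets"]
        if pool_substring in t["scrapePool"]
    ]


def fetch_targets(base_url: str) -> dict:
    """base_url is e.g. http://<node-ip>:<nodeport> (placeholder)."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets?state=active", timeout=10) as resp:
        return json.load(resp)


sample = {
    "status": "success",
    "data": {
        "activeTargets": [
            {"scrapePool": "serviceMonitor/kubesphere-monitoring-system/jetson-exporter/0",
             "scrapeUrl": "http://192.168.1.50:9102/metrics", "health": "up"},
            {"scrapePool": "serviceMonitor/kubesphere-monitoring-system/node-exporter/0",
             "scrapeUrl": "https://192.168.1.50:9100/metrics", "health": "up"},
        ],
        "droppedTargets": [],
    },
}
print(target_health(sample, "jetson"))
```

A `health` of `down` points at the scrape itself (network, port, TLS), while a missing entry usually means the ServiceMonitor was not selected.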

Viewing GPU Monitoring Data in the KubeSphere Frontend

The frontend requires changes to the KubeSphere console code. This is frontend work, so it is not covered in detail here.

Next, expose the Prometheus Service port through a NodePort so that the frontend can query GPU status over the HTTP API.

HTTP API
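The console change itself is not shown. As an illustration of the kind of HTTP call the frontend makes, a sketch that builds an instant query against Prometheus' `/api/v1/query` and reads the resulting vector. The base URL, NodePort and metric name `jetson_gpu_usage_percent` are placeholders; the real metric names depend on the collector.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Tuple


def query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus instant-query URL."""
    return f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def vector_values(response: dict) -> List[Tuple[Dict[str, str], float]]:
    """Extract (labels, value) pairs from an instant-query vector result."""
    if response.get("status") != "success":
        raise RuntimeError(response.get("error", "query failed"))
    data = response["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected vector, got {data['resultType']}")
    # Each sample's value is [unix_timestamp, "value as string"]
    return [(r["metric"], float(r["value"][1])) for r in data["result"]]


def query(base_url: str, promql: str) -> List[Tuple[Dict[str, str], float]]:
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return vector_values(json.load(resp))


sample = {"status": "success", "data": {"resultType": "vector", "result": [
    {"metric": {"__name__": "jetson_gpu_usage_percent", "instance": "edge-wpx"},
     "value": [1700000000.0, "37"]}]}}
print(vector_values(sample))
```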

With this, KubeSphere successfully monitors the GPU status of the KubeEdge edge node (Jetson).

Summary

Building on KubeEdge, we brought observability of edge devices, including GPU information, to the KubeSphere frontend.

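For CPU and memory monitoring of edge nodes, we first modified the affinity so that KubeSphere's built-in node-exporter can run and collect metrics on edge nodes, then used KubeEdge's EdgeMesh (together with the DNS and environment-variable fixes) so that the collected data can be served to KubeSphere's Prometheus. This enables CPU and memory monitoring.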

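For GPU monitoring of edge nodes, we installed jtop to obtain GPU utilization, temperature, and other data, then built a Jetson GPU Exporter that exposes the data obtained from jtop for KubeSphere's Prometheus to scrape. By modifying the code of the KubeSphere frontend ks-console, the console fetches Prometheus data through the HTTP API, enabling monitoring of GPU utilization and related metrics.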