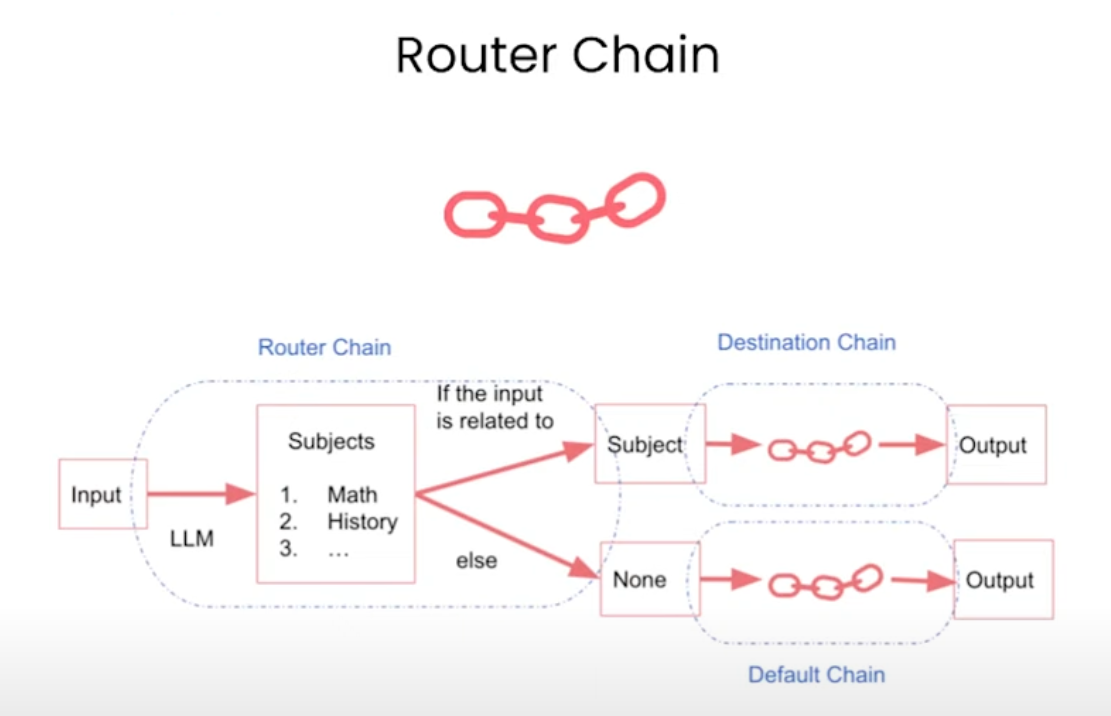


physics_template = """You are a very smart physics professor. \
You are great at answering questions about physics in a concise \
and easy to understand manner. \
When you don't know the answer to a question you admit \
that you don't know.

Here is a question:
{input}"""

math_template = """You are a very good mathematician. \
You are great at answering math questions. \
You are so good because you are able to break down \
hard problems into their component parts, \
answer the component parts, and then put them together \
to answer the broader question.

Here is a question:
{input}"""

history_template = """You are a very good historian. \
You have an excellent knowledge of and understanding of people, \
events and contexts from a range of historical periods. \
You have the ability to think, reflect, debate, discuss and \
evaluate the past. You have a respect for historical evidence \
and the ability to make use of it to support your explanations \
and judgements.

Here is a question:
{input}"""

computerscience_template = """You are a successful computer scientist. \
You have a passion for creativity, collaboration, \
forward-thinking, confidence, strong problem-solving capabilities, \
understanding of theories and algorithms, and excellent communication \
skills. You are great at answering coding questions. \
You are so good because you know how to solve a problem by \
describing the solution in imperative steps \
that a machine can easily interpret and you know how to \
choose a solution that has a good balance between \
time complexity and space complexity.

Here is a question:
{input}"""

# Each entry names a destination, describes it for the router, and supplies its prompt.
prompt_infos = [
    {
        "name": "physics",
        "description": "Good for answering questions about physics",
        "prompt_template": physics_template,
    },
    {
        "name": "math",
        "description": "Good for answering math questions",
        "prompt_template": math_template,
    },
    {
        "name": "History",
        "description": "Good for answering history questions",
        "prompt_template": history_template,
    },
    {
        "name": "computer science",
        "description": "Good for answering computer science questions",
        "prompt_template": computerscience_template,
    },
]

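from langchain.chains import LLMChain
from langchain.chains.router import MultiPromptChain
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.prompts import ChatPromptTemplate, PromptTemplate

# NOTE: `model` is assumed to be a chat model created earlier (not shown in this listing).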

# Build one LLMChain per subject, keyed by the prompt's name.
destination_chains = {}
for p_info in prompt_infos:
    name = p_info["name"]
    prompt_template = p_info["prompt_template"]
    prompt = ChatPromptTemplate.from_template(template=prompt_template)
    chain = LLMChain(llm=model, prompt=prompt)
    destination_chains[name] = chain

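# One "name: description" line per destination, shown to the router LLM.
destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
destinations_str = "\n".join(destinations)

# Fallback chain used when the router answers "DEFAULT".
default_prompt = ChatPromptTemplate.from_template("{input}")
default_chain = LLMChain(llm=model, prompt=default_prompt)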

MULTI_PROMPT_ROUTER_TEMPLATE = """Given a raw text input to a \
language model select the model prompt best suited for the input. \
You will be given the names of the available prompts and a \
description of what the prompt is best suited for. \
You may also revise the original input if you think that revising \
it will ultimately lead to a better response from the language model.

<< FORMATTING >>
Return a markdown code snippet with a JSON object formatted to look like:
```json
{{{{
    "destination": string \\ name of the prompt to use or "DEFAULT"
    "next_inputs": string \\ a potentially modified version of the original input
}}}}
```

REMEMBER: "destination" MUST be one of the candidate prompt \
names specified below OR it can be "DEFAULT" if the input is not \
well suited for any of the candidate prompts.
REMEMBER: "next_inputs" can just be the original input \
if you don't think any modifications are needed.

<< CANDIDATE PROMPTS >>
{destinations}

<< INPUT >>
{{input}}

<< OUTPUT (remember to include the ```json)>>"""

# Fill in the destination list now; {input} is left for PromptTemplate to fill later.
router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(
    destinations=destinations_str
)
router_prompt = PromptTemplate(
    template=router_template,
    input_variables=["input"],
    output_parser=RouterOutputParser(),
)

router_chain = LLMRouterChain.from_llm(model, router_prompt)

chain = MultiPromptChain(
    router_chain=router_chain,
    destination_chains=destination_chains,
    default_chain=default_chain,
    verbose=True,
)

r = chain.run("What is black body radiation?")
print(r)

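The router template mixes `{destinations}`, `{{input}}` and `{{{{ ... }}}}` because it is formatted twice: once by `str.format` when the destinations are filled in, and once more when the user input is substituted. The sketch below reproduces that two-stage substitution with plain `str.format` only. The shortened `template` string and the second `.format` call are stand-ins chosen for illustration (the second call approximates what `PromptTemplate` does with its default f-string style), not the library's own code.

```python
# Shortened stand-in for the router template (illustrative, not the full prompt).
template = """{{{{
    "destination": string
}}}}
<< CANDIDATE PROMPTS >>
{destinations}
<< INPUT >>
{{input}}"""

# First pass: fill in the destinations, as MULTI_PROMPT_ROUTER_TEMPLATE.format(...) does.
# Quadruple braces collapse to double braces; {{input}} collapses to {input}.
first_pass = template.format(
    destinations="physics: Good for answering questions about physics"
)

# Second pass: fill in the user input (approximates the prompt template's formatting).
# The remaining double braces collapse to the literal JSON braces.
second_pass = first_pass.format(input="What is black body radiation?")

print(first_pass)
print("-----")
print(second_pass)
```

After the first pass the text still contains the `{input}` placeholder and escaped `{{ }}` braces. After the second pass the JSON example has single literal braces and the question appears in place of the placeholder.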